The Column gives you the opportunity to ask our experts about their work and how it shapes the built environment.

Last month, you submitted your questions for Sherif Eltarabishy, a design systems analyst in the Applied R+D team at Foster + Partners. Sherif drives cutting-edge applied machine learning work, drawing on full-stack software development, geometry, optimisation, XR and digital fabrication.

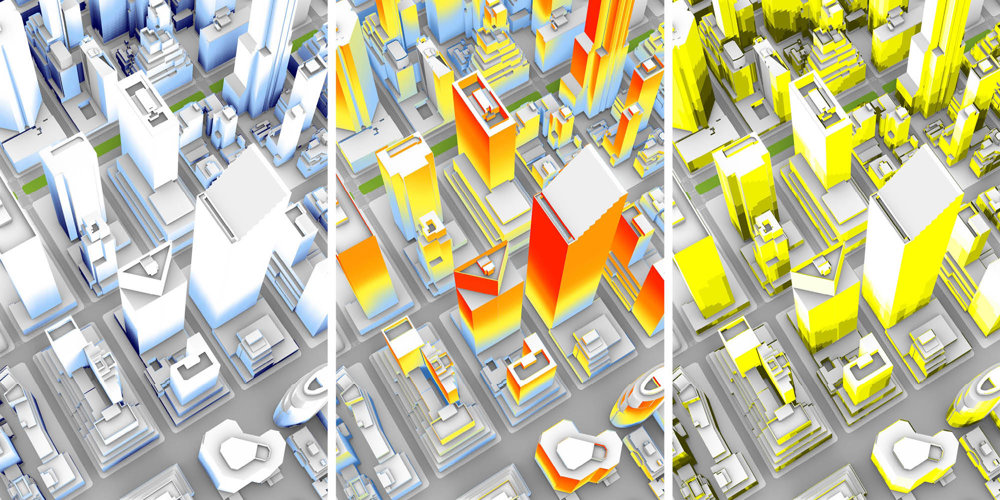

His responses span a range of topics, including the importance of process mapping, challenges associated with GenAI, and the newly launched Cyclops plugin that turbocharges ray tracing simulations to enhance sustainable design.